Ridger AIoAI

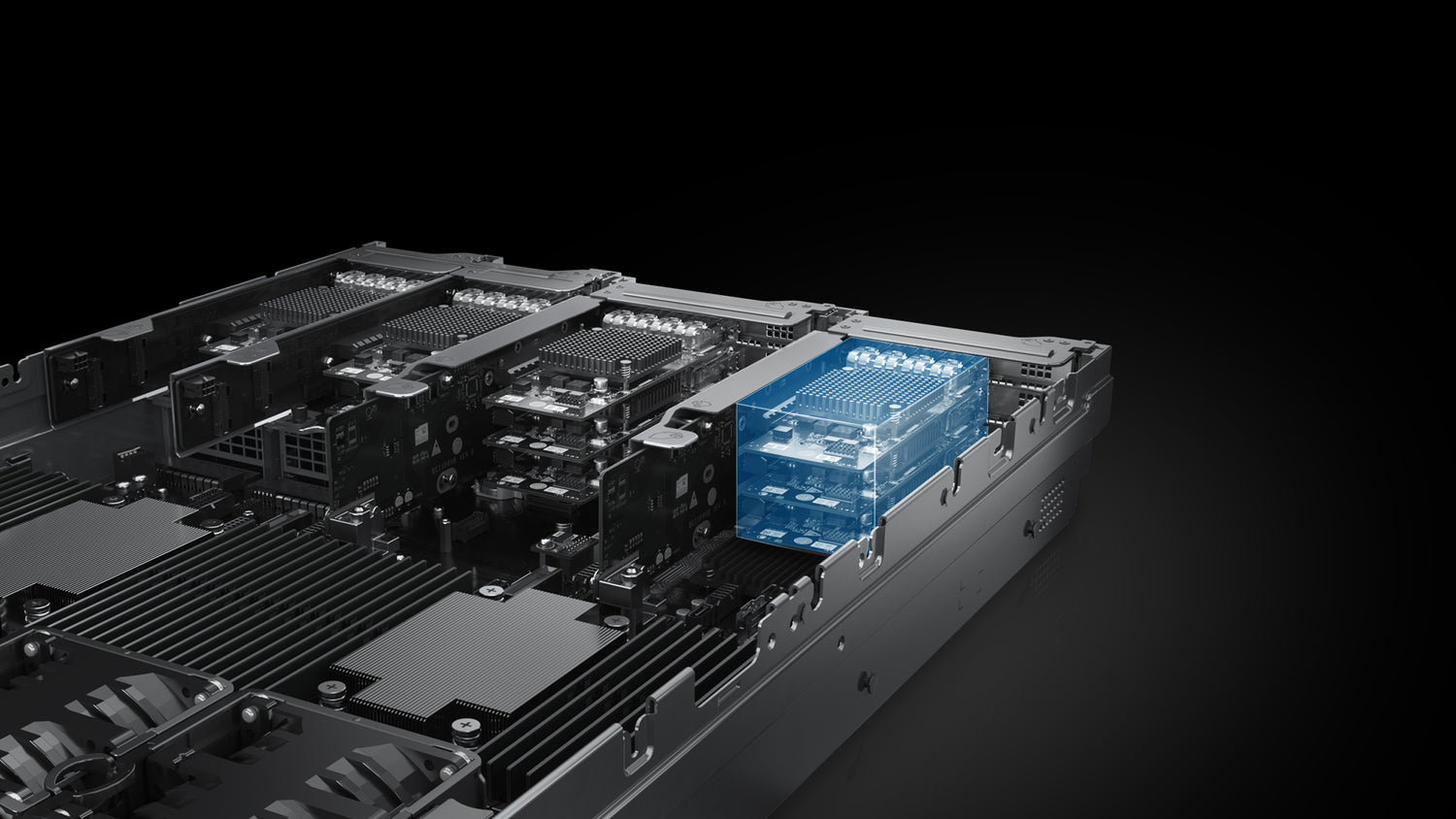

Ridger AIoAI is an LLM Deployment-Native System designed for efficient and cost-effective on-premise deployment of large language models. By adopting a storage-centric architecture, Ridger introduces a “storage-replaces-compute” approach that reduces training and fine-tuning costs by up to 90%. With built-in API integration and intelligent automation features, it streamlines the deployment process and ensures seamless connection to your business systems, bringing unprecedented affordability, simplicity, and performance to local LLM deployment.

AIoAI, Your First AI Appliance

Zero Wait

Everything you need for your entire AI workflow comes preloaded, protecting your privacy and meeting compliance requirements

Pay Less

No cluster required, cutting costs by up to 90%

Click & Run

Automate setup with a few clicks through a quick wizard in the web UI

Open & Use

Data Stays Local: Built for Privacy and Compliance

All model operations and data interactions are performed entirely on-premise. No data is uploaded to the cloud, effectively eliminating the risk of cloud-side data leakage. This architecture fully meets the stringent requirements of sectors such as government, finance, and healthcare, including data sovereignty, auditability, and industry-specific compliance standards.

Flexible Deployment: Low Latency, Fast Fine-Tuning, and Agile Business Adaptation

Compared to cloud-based architectures, on-premise AI deployment delivers near-zero network latency, which is ideal for edge computing, real-time decision-making, and other latency-sensitive scenarios. It also enables on-demand fine-tuning and rapid iteration, allowing your models to adapt quickly as business needs evolve, without waiting for cloud-side processes or platform approvals.

Stable and Reliable: Cost-Controlled, Risk-Resistant, and Fully Autonomous

On-premise deployment frees you from reliance on cloud services, eliminating risks such as price hikes, API changes, service disruptions, or even platform shutdowns. With its integrated hardware design, Ridger requires no distributed clusters, drastically reducing both costs and operational overhead. The result is a truly self-contained, stable system that works right out of the box.

Affordable

90% reduction in deployment cost

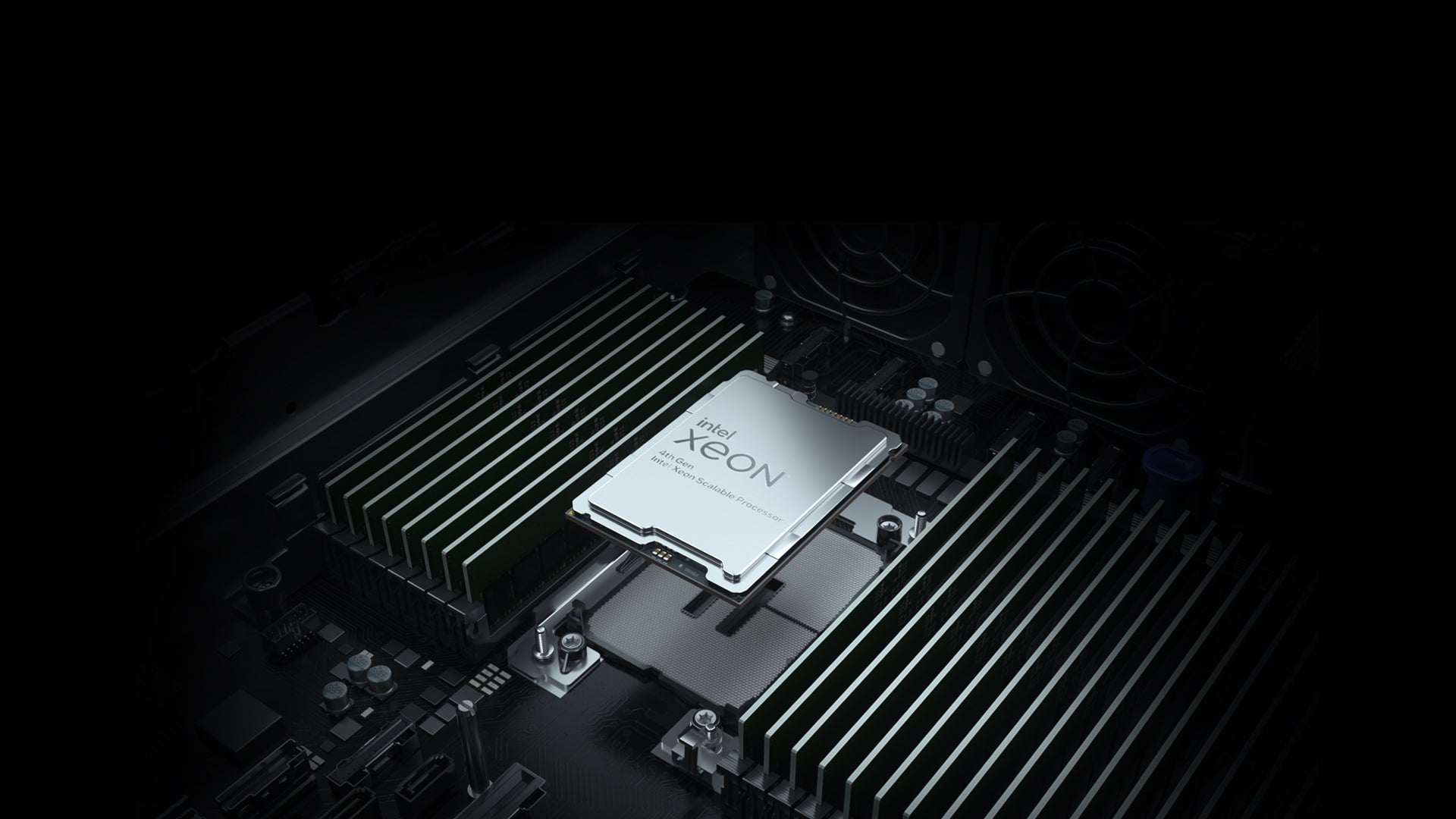

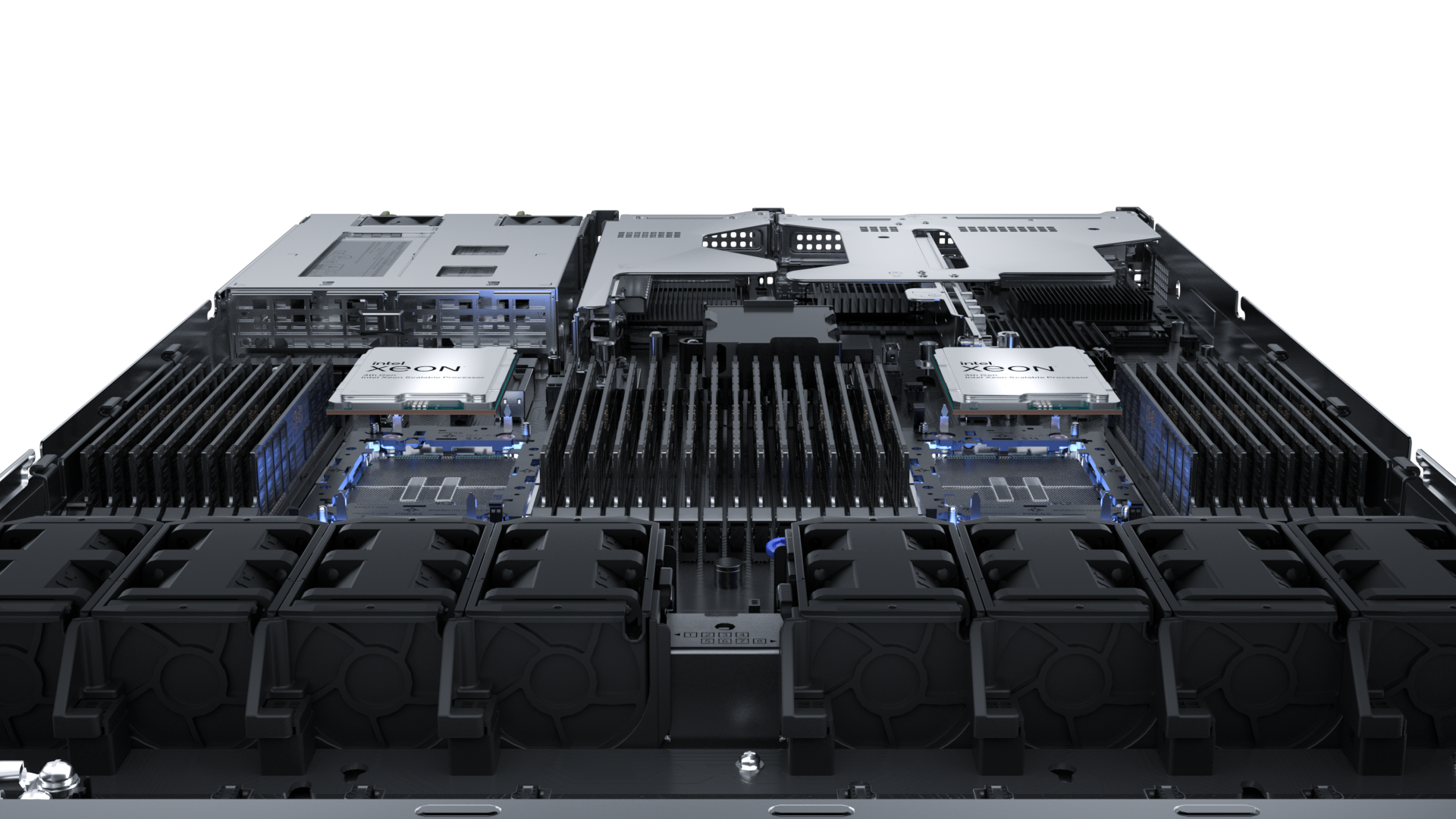

Training a 110B-parameter model traditionally requires a GPU memory pool of about 2,200GB, equivalent to around 30 high-end GPUs (e.g., 24 H100 cards as used in NVIDIA DGX systems), deployed across 2–3 4U servers, at a total cost of ¥8–10 million.

In contrast, Ridger’s proprietary AI-HCM architecture enables training the same model with a single blade server equipped with four RTX 5880 GPUs (192GB total memory), reducing hardware cost to just ¥500,000–800,000.

This means a reduction of over 90% in GPU cost, significantly lowering the entry barrier to LLM training.
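The arithmetic behind these figures can be checked with a short sketch. The memory pool, GPU counts, and price ranges are the ones quoted above; the 80GB-per-GPU value is an assumption (the capacity of an H100 80GB card) and the function names are illustrative only.

```python
import math

# Figures quoted in the text above.
MEMORY_POOL_GB = 2200                              # traditional 110B training
TRADITIONAL_COST_CNY = (8_000_000, 10_000_000)     # low and high estimate
RIDGER_COST_CNY = (500_000, 800_000)               # low and high estimate
RIDGER_GPUS = 4
RIDGER_TOTAL_MEMORY_GB = 192

# Assumption (not stated in the text): 80 GB per high-end GPU.
ASSUMED_GB_PER_GPU = 80


def gpus_needed(pool_gb: float, gb_per_gpu: float) -> int:
    """Smallest whole number of GPUs whose combined memory covers the pool."""
    return math.ceil(pool_gb / gb_per_gpu)


def reduction_range(baseline: tuple, new: tuple) -> tuple:
    """Return (worst-case, best-case) fractional cost reduction."""
    worst = 1 - max(new) / min(baseline)   # dearest new vs cheapest baseline
    best = 1 - min(new) / max(baseline)    # cheapest new vs dearest baseline
    return worst, best


if __name__ == "__main__":
    print("GPUs for the pool:", gpus_needed(MEMORY_POOL_GB, ASSUMED_GB_PER_GPU))
    print("GB per Ridger GPU:", RIDGER_TOTAL_MEMORY_GB / RIDGER_GPUS)
    worst, best = reduction_range(TRADITIONAL_COST_CNY, RIDGER_COST_CNY)
    print(f"Cost reduction: {worst:.0%} to {best:.0%}")
```

Under the 80GB assumption the pool works out to 28 GPUs, and the quoted price ranges give a hardware cost reduction between 90% and 95%.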

90% lower TCO compared to traditional solutions

Traditional training clusters consume tens of kilowatts of power—driving up electricity costs, requiring electrical and cooling system upgrades, taking up significant space, and increasing maintenance complexity.

Ridger’s proprietary AI-HCM architecture is engineered for energy efficiency from the ground up, significantly reducing TCO (Total Cost of Ownership):

- Only 1kW power consumption: saves electricity and avoids costly power upgrades

- No complex cooling or dedicated server room needed: quick to deploy in any environment

- Integrated hardware–software design: fewer components, fewer failures, easier maintenance

Lower energy use, faster deployment, smaller footprint, simpler operations — Ridger compresses long-term operational costs at the source, making large model deployment truly practical and sustainable.
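To put the power figures in perspective, here is a minimal sketch of the annual energy arithmetic. The 1kW figure comes from the text above; the 20kW cluster is a hypothetical stand-in for "tens of kilowatts", and the electricity price is a placeholder you would replace with your own tariff. Cooling overhead is not modeled.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_energy_kwh(power_kw: float, utilization: float = 1.0) -> float:
    """Energy drawn over one year at a constant load, in kWh."""
    return power_kw * HOURS_PER_YEAR * utilization


def annual_electricity_cost(power_kw: float, price_per_kwh: float,
                            utilization: float = 1.0) -> float:
    """Yearly electricity bill for a constant load at a flat tariff."""
    return annual_energy_kwh(power_kw, utilization) * price_per_kwh


if __name__ == "__main__":
    RIDGER_KW = 1.0        # from the text
    CLUSTER_KW = 20.0      # hypothetical example of "tens of kilowatts"
    PRICE_PER_KWH = 1.0    # placeholder tariff, in your local currency

    for name, kw in (("Ridger", RIDGER_KW), ("Cluster", CLUSTER_KW)):
        kwh = annual_energy_kwh(kw)
        cost = annual_electricity_cost(kw, PRICE_PER_KWH)
        print(f"{name}: {kwh:,.0f} kWh/year, cost {cost:,.0f}")
```

Running continuously, a 1kW system draws 8,760 kWh per year, while the hypothetical 20kW cluster draws 175,200 kWh before any cooling load is counted.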

Efficient

Advanced Automation Toolkit

- Automatically detects system configurations and intelligently selects optimal training parameters based on user choices and training data

- Supports one-click reset to revert to recommended default settings instantly

- Offers advanced parameter customization for expert users who need fine-grained control
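The three behaviors above (recommended defaults, one-click reset, expert overrides) can be pictured with a small sketch. This is not Ridger's implementation: every class, function, field, and heuristic here is hypothetical and only illustrates the pattern of deriving defaults from detected hardware, then allowing overrides and a reset.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SystemInfo:
    """Hypothetical summary of detected hardware."""
    gpu_count: int
    gpu_memory_gb: int


@dataclass(frozen=True)
class TrainingParams:
    """Hypothetical set of tunable training parameters."""
    batch_size: int
    learning_rate: float
    epochs: int


def recommend(system: SystemInfo, dataset_size: int) -> TrainingParams:
    """Toy heuristic, invented for illustration only."""
    # Scale batch size with total GPU memory, in 24 GB steps per GPU.
    batch_size = max(1, system.gpu_count * (system.gpu_memory_gb // 24))
    # Use more passes over small datasets.
    epochs = 3 if dataset_size >= 10_000 else 5
    return TrainingParams(batch_size=batch_size, learning_rate=2e-5, epochs=epochs)


class TrainingConfig:
    """Holds recommended defaults plus any expert overrides."""

    def __init__(self, system: SystemInfo, dataset_size: int):
        self.defaults = recommend(system, dataset_size)
        self.params = self.defaults

    def customize(self, **overrides) -> None:
        """Override individual fields; unknown names raise TypeError."""
        self.params = replace(self.params, **overrides)

    def reset(self) -> None:
        """Revert to the recommended defaults."""
        self.params = self.defaults


if __name__ == "__main__":
    cfg = TrainingConfig(SystemInfo(gpu_count=4, gpu_memory_gb=48), dataset_size=5_000)
    print("recommended:", cfg.params)
    cfg.customize(learning_rate=1e-4)
    print("customized: ", cfg.params)
    cfg.reset()
    print("after reset:", cfg.params)
```

Keeping the recommended values immutable and separate from the active values is what makes the reset a single assignment, whatever the expert changed in between.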

Model-as-a-Service (MaaS) Engine

- Fully compatible with mainstream large models from 6B to 110B parameters, supporting one-click deployment for instant use

- Seamless integration with models of various sizes and architectures, including LLaMA, DeepSeek, Yi, and more

- Unified scheduling across model architectures, enabling flexible switching and concurrent execution of models at different scales

Ridger’s Custom-Built UI

Ridger’s custom-built UI provides a fully visual workflow—from model import and parameter setup to training and inference validation.

No command-line or coding required—deploy and train multiple models with zero technical barriers.

- Model Repository Management: Easily import, organize, browse, and delete models

- Training Monitoring: Live status updates on training progress, parameters, GPU, and memory usage

- Multi-language Support: Seamless switching between Chinese and English interfaces for global accessibility

- Lightweight Deployment: Web-based interface that runs directly in the browser and is easy to integrate with private compute environments


Featured Products

- RidgeDrive: regular price ¥206.00 CNY

- RidgeScale: regular price ¥206.00 CNY

- AIOAI-Computer: regular price ¥206.00 CNY